Docker Machine Xhyve

This section lists terms and definitions you should be familiar with before getting deeper into Docker. For further definitions, see the extensive glossary provided by Docker.

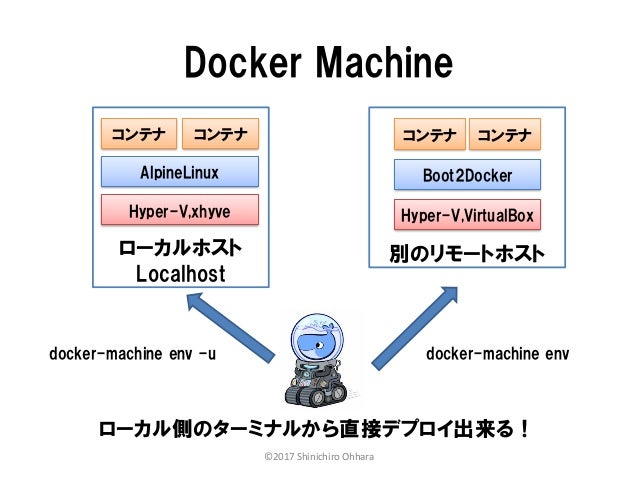

Docker Engine 1.12 introduced swarm mode for natively managing a cluster of Docker Engines, called a swarm. Swarm mode implements the Raft consensus algorithm, so it no longer requires an external key-value store such as Consul or etcd. If you want to run a swarm cluster on a developer’s machine, there are several options. Docker CE for Mac is based on the Apple Hypervisor framework and the xhyve hypervisor, which provides a Linux Docker host virtual machine on macOS. Docker CE for Windows and for Mac replace Docker Toolbox, which was based on Oracle VirtualBox.

Container image: A package with all the dependencies and information needed to create a container. An image includes all the dependencies (such as frameworks) plus deployment and execution configuration to be used by a container runtime. Usually, an image derives from multiple base images that are layers stacked on top of each other to form the container's filesystem. An image is immutable once it has been created.

Dockerfile: A text file that contains instructions for building a Docker image. It's like a batch script: the first line states the base image to begin with, and the following instructions install required programs, copy files, and so on, until you get the working environment you need.

Build: The action of building a container image based on the information and context provided by its Dockerfile, plus additional files in the folder where the image is built. You build images with the docker build command.
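As a minimal sketch, a build usually names a tag and a build context. The helper name build_image and the tag myapp:1.0 are placeholders chosen for illustration and do not come from this document.

```shell
# Hypothetical helper: build an image from the Dockerfile in the current folder.
# "myapp:1.0" is a placeholder repository:tag; "." is the build context.
build_image() {
  docker build --tag "${1:-myapp:1.0}" .
}
```

Calling build_image uses the placeholder tag; build_image web:2.0 would tag the result as web:2.0 instead.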

Container: An instance of a Docker image. A container represents the execution of a single application, process, or service. It consists of the contents of a Docker image, an execution environment, and a standard set of instructions. When scaling a service, you create multiple instances of a container from the same image. Or a batch job can create multiple containers from the same image, passing different parameters to each instance.

Volumes: Offer a writable filesystem that the container can use. Since images are read-only but most programs need to write to the filesystem, volumes add a writable layer on top of the container image, so the programs have access to a writable filesystem. The program doesn't know it's accessing a layered filesystem; to the program, it's just the filesystem as usual. Volumes live in the host system and are managed by Docker.

Tag: A mark or label you can apply to images so that different images or versions of the same image (depending on the version number or the target environment) can be identified.

Multi-stage build: A feature, available since Docker 17.05, that helps reduce the size of the final images. For example, you can use a large base image containing the SDK to compile and publish the application, and then copy the published output into a small runtime-only base image to produce a much smaller final image.
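The following sketch writes an illustrative two-stage Dockerfile to show the pattern. The project name MyApp, the output file name Dockerfile.multistage, and the choice of the .NET 5.0 SDK and ASP.NET runtime images are assumptions made for the example.

```shell
# Write an illustrative multi-stage Dockerfile (the project name "MyApp" is hypothetical).
cat > Dockerfile.multistage <<'EOF'
# Stage 1: compile and publish with the large SDK image.
FROM mcr.microsoft.com/dotnet/sdk:5.0 AS build
WORKDIR /src
COPY . .
RUN dotnet publish -c Release -o /app/publish

# Stage 2: copy only the published output into the small runtime image.
FROM mcr.microsoft.com/dotnet/aspnet:5.0 AS final
WORKDIR /app
COPY --from=build /app/publish .
ENTRYPOINT ["dotnet", "MyApp.dll"]
EOF
```

Only the second stage ends up in the final image, so the SDK and intermediate build files are left behind.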

Repository (repo): A collection of related Docker images, labeled with a tag that indicates the image version. Some repos contain multiple variants of a specific image, such as an image containing SDKs (heavier), an image containing only runtimes (lighter), etc. Those variants can be marked with tags. A single repo can contain platform variants, such as a Linux image and a Windows image.

Registry: A service that provides access to repositories. The default registry for most public images is Docker Hub (owned by Docker as an organization). A registry usually contains repositories from multiple teams. Companies often have private registries to store and manage images they've created. Azure Container Registry is another example.

Multi-arch image: Multi-architecture support is a feature that simplifies the selection of the appropriate image according to the platform where Docker is running. For example, when a Dockerfile requests a base image mcr.microsoft.com/dotnet/sdk:5.0 from the registry, it actually gets 5.0-nanoserver-1909, 5.0-nanoserver-1809 or 5.0-buster-slim, depending on the operating system and version where Docker is running.

Docker Hub: A public registry to upload images and work with them. Docker Hub provides Docker image hosting, public or private registries, build triggers and web hooks, and integration with GitHub and Bitbucket.

Azure Container Registry: A public resource for working with Docker images and their components in Azure. This provides a registry that's close to your deployments in Azure and that gives you control over access, making it possible to use your Azure Active Directory groups and permissions.

Docker Trusted Registry (DTR): A Docker registry service (from Docker) that can be installed on-premises so it lives within the organization's datacenter and network. It's convenient for private images that should be managed within the enterprise. Docker Trusted Registry is included as part of the Docker Datacenter product. For more information, see Docker Trusted Registry (DTR).

Docker Community Edition (CE): Development tools for Windows and macOS for building, running, and testing containers locally. Docker CE for Windows provides development environments for both Linux and Windows Containers. The Linux Docker host on Windows is based on a Hyper-V virtual machine. The host for Windows Containers is directly based on Windows. Docker CE for Mac is based on the Apple Hypervisor framework and the xhyve hypervisor, which provides a Linux Docker host virtual machine on macOS. Docker CE for Windows and for Mac replace Docker Toolbox, which was based on Oracle VirtualBox.

Docker Enterprise Edition (EE): An enterprise-scale version of Docker tools for Linux and Windows development.

Compose: A command-line tool and YAML file format with metadata for defining and running multi-container applications. You define a single application based on multiple images with one or more .yml files that can override values depending on the environment. After you've created the definitions, you can deploy the whole multi-container application with a single command (docker-compose up) that creates a container per image on the Docker host.

Cluster: A collection of Docker hosts exposed as if it were a single virtual Docker host, so that the application can scale to multiple instances of the services spread across multiple hosts within the cluster. Docker clusters can be created with Kubernetes, Azure Service Fabric, Docker Swarm and Mesosphere DC/OS.

Orchestrator: A tool that simplifies management of clusters and Docker hosts. Orchestrators enable you to manage their images, containers, and hosts through a command-line interface (CLI) or a graphical UI. You can manage container networking, configurations, load balancing, service discovery, high availability, Docker host configuration, and more. An orchestrator is responsible for running, distributing, scaling, and healing workloads across a collection of nodes. Typically, orchestrator products are the same products that provide cluster infrastructure, like Kubernetes and Azure Service Fabric, among other offerings in the market.

Elasticsearch is also available as Docker images. The images use centos:8 as the base image.

A list of all published Docker images and tags is available at www.docker.elastic.co. The source files are in GitHub.

This package contains both free and subscription features. Start a 30-day trial to try out all of the features.

Obtaining Elasticsearch for Docker is as simple as issuing a docker pull command against the Elastic Docker registry.
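A minimal sketch of that pull, wrapped in a helper so it can be sourced into a shell session without downloading anything until it is called. The helper name es_pull is hypothetical; the 7.12.0 tag is the version this page later uses as its pinning example.

```shell
# Hypothetical helper: pull the Elasticsearch image from the Elastic Docker registry.
# The 7.12.0 tag matches the pinned-version example given later in this page.
es_pull() {
  docker pull docker.elastic.co/elasticsearch/elasticsearch:7.12.0
}
```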

To start a single-node Elasticsearch cluster for development or testing, specify single-node discovery to bypass the bootstrap checks:
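One way this might look is sketched below. The helper name es_single_node is hypothetical, and binding the published ports to 127.0.0.1 is a choice made here so the node is reachable only from the host; it is not something this page prescribes for the single-node case.

```shell
# Hypothetical helper: start one Elasticsearch node with single-node discovery.
# Ports 9200 (HTTP) and 9300 (transport) are published on the loopback interface only.
es_single_node() {
  docker run -p 127.0.0.1:9200:9200 -p 127.0.0.1:9300:9300 \
    -e "discovery.type=single-node" \
    docker.elastic.co/elasticsearch/elasticsearch:7.12.0
}
```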

Starting a multi-node cluster with Docker Compose

To get a three-node Elasticsearch cluster up and running in Docker, you can use Docker Compose:
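The sketch below writes a docker-compose.yml that matches the description in this section: three nodes named es01, es02 and es03, a 512MB heap set through ES_JAVA_OPTS, named volumes data01 to data03, and port 9200 published from es01. The cluster name es-docker-cluster, the network name elastic, and the compose file version are assumptions. Note that running it overwrites any docker-compose.yml in the current directory.

```shell
# Write a sample three-node compose file; cluster and network names are illustrative.
cat > docker-compose.yml <<'EOF'
version: '2.2'
services:
  es01:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.12.0
    container_name: es01
    environment:
      - node.name=es01
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es02,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data01:/usr/share/elasticsearch/data
    ports:
      - 9200:9200
    networks:
      - elastic
  es02:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.12.0
    container_name: es02
    environment:
      - node.name=es02
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data02:/usr/share/elasticsearch/data
    networks:
      - elastic
  es03:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.12.0
    container_name: es03
    environment:
      - node.name=es03
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data03:/usr/share/elasticsearch/data
    networks:
      - elastic
volumes:
  data01:
    driver: local
  data02:
    driver: local
  data03:
    driver: local
networks:
  elastic:
    driver: bridge
EOF
```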

This sample docker-compose.yml file uses the ES_JAVA_OPTS environment variable to manually set the heap size to 512MB. We do not recommend using ES_JAVA_OPTS in production. See Manually set the heap size.

This sample Docker Compose file brings up a three-node Elasticsearch cluster. Node es01 listens on localhost:9200 and es02 and es03 talk to es01 over a Docker network.

Please note that this configuration exposes port 9200 on all network interfaces, and given how Docker manipulates iptables on Linux, this means that your Elasticsearch cluster is publicly accessible, potentially ignoring any firewall settings. If you don’t want to expose port 9200 and instead use a reverse proxy, replace 9200:9200 with 127.0.0.1:9200:9200 in the docker-compose.yml file. Elasticsearch will then only be accessible from the host machine itself.

The Docker named volumes data01, data02, and data03 store the node data directories so the data persists across restarts. If they don’t already exist, docker-compose creates them when you bring up the cluster.

Make sure Docker Engine is allotted at least 4GiB of memory. In Docker Desktop, you configure resource usage on the Advanced tab in Preferences (macOS) or Settings (Windows).

Docker Compose is not pre-installed with Docker on Linux. See docs.docker.com for installation instructions: Install Compose on Linux.

Run docker-compose up to bring up the cluster. Then submit a _cat/nodes request to see that the nodes are up and running.
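These two steps can be sketched as a pair of helpers. The function names are hypothetical, and the curl call assumes the cluster is reachable on localhost:9200 as described for node es01.

```shell
# Hypothetical helper: bring up the cluster defined in ./docker-compose.yml.
# docker-compose up runs in the foreground and streams the container logs.
es_cluster_up() {
  docker-compose up
}

# Hypothetical helper: list the nodes that have joined the cluster.
es_cat_nodes() {
  curl -X GET "localhost:9200/_cat/nodes?v=true&pretty"
}
```

Run es_cluster_up in one terminal and es_cat_nodes in another once the nodes have started.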

Log messages go to the console and are handled by the configured Docker logging driver. By default you can access logs with docker logs. If you would prefer the Elasticsearch container to write logs to disk, set the ES_LOG_STYLE environment variable to file. This causes Elasticsearch to use the same logging configuration as other Elasticsearch distribution formats.

To stop the cluster, run docker-compose down. The data in the Docker volumes is preserved and loaded when you restart the cluster with docker-compose up. To delete the data volumes when you bring down the cluster, specify the -v option: docker-compose down -v.

See Encrypting communications in an Elasticsearch Docker Container and Run the Elastic Stack in Docker with TLS enabled.

The following requirements and recommendations apply when running Elasticsearch in Docker in production.

The vm.max_map_count kernel setting must be set to at least 262144 for production use.

How you set vm.max_map_count depends on your platform:

Linux

The vm.max_map_count setting should be set permanently in /etc/sysctl.conf. To apply the setting on a live system, run sysctl -w vm.max_map_count=262144.
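A minimal sketch of both Linux steps follows. The helper names are hypothetical, and the sysctl call needs root privileges on a real host.

```shell
# Hypothetical helper: show the persistent setting, if one is configured.
# A correctly configured host prints a line such as: vm.max_map_count=262144
es_show_persistent_map_count() {
  grep vm.max_map_count /etc/sysctl.conf
}

# Hypothetical helper: apply the setting to the running kernel (requires root).
es_apply_map_count() {
  sysctl -w vm.max_map_count=262144
}
```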

macOS with Docker for Mac

The vm.max_map_count setting must be set within the xhyve virtual machine:

- From the command line, open a screen session on the virtual machine's console.

- Press enter and use `sysctl` to configure vm.max_map_count.

- To exit the screen session, type Ctrl a d.
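The sequence above might look like the sketch below. The helper name is hypothetical, and the tty path is an assumption based on where Docker for Mac releases of that period kept the virtual machine console; it may differ on other versions.

```shell
# Hypothetical helper: attach to the Docker for Mac virtual machine console.
# The tty path is an assumption and may vary between Docker for Mac releases.
es_mac_vm_console() {
  screen "$HOME/Library/Containers/com.docker.docker/Data/vms/0/tty"
}

# Inside the virtual machine, after pressing enter, run:
#   sysctl -w vm.max_map_count=262144
```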

Windows and macOS with Docker Desktop

The vm.max_map_count setting must be set via docker-machine, by running sysctl inside the machine over docker-machine ssh.

Windows with Docker Desktop WSL 2 backend

The vm.max_map_count setting must be set in the docker-desktop container, for example by running sysctl through wsl -d docker-desktop.
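The docker-machine and WSL 2 variants can be sketched as follows. The helper names are hypothetical, the machine name default is an assumption, and the second helper assumes a shell in which the wsl command is on the PATH (for example Git Bash on Windows).

```shell
# Hypothetical helper: set the value inside a docker-machine VM over SSH.
# "default" is an assumed machine name; pass another name as the first argument.
es_map_count_docker_machine() {
  docker-machine ssh "${1:-default}" "sudo sysctl -w vm.max_map_count=262144"
}

# Hypothetical helper: set the value inside the docker-desktop WSL 2 distribution.
es_map_count_wsl2() {
  wsl -d docker-desktop sysctl -w vm.max_map_count=262144
}
```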

Configuration files must be readable by the elasticsearch user

By default, Elasticsearch runs inside the container as user elasticsearch using uid:gid 1000:0.

One exception is OpenShift, which runs containers using an arbitrarily assigned user ID. OpenShift presents persistent volumes with the gid set to 0, which works without any adjustments.

If you are bind-mounting a local directory or file, it must be readable by the elasticsearch user. In addition, this user must have write access to the config, data and log dirs (Elasticsearch needs write access to the config directory so that it can generate a keystore). A good strategy is to grant group access to gid 0 for the local directory.

For example, to prepare a local directory for storing data through a bind-mount:
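A minimal sketch of that preparation is shown below. The helper name and the default directory name esdatadir are illustrative, and changing the group to gid 0 usually requires root.

```shell
# Hypothetical helper: create a data directory that gid 0 can read and write.
# "esdatadir" is an illustrative default; pass another path as the first argument.
es_prepare_datadir() {
  dir="${1:-esdatadir}"
  mkdir -p "$dir"
  chmod g+rwx "$dir"
  chgrp 0 "$dir"   # usually requires root
}
```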

You can also run an Elasticsearch container using both a custom UID and GID. Unless you bind-mount each of the config, data and logs directories, you must pass the command line option --group-add 0 to docker run. This ensures that the user under which Elasticsearch is running is also a member of the root (GID 0) group inside the container.

As a last resort, you can force the container to mutate the ownership of any bind-mounts used for the data and log dirs through the environment variable TAKE_FILE_OWNERSHIP. When you do this, they will be owned by uid:gid 1000:0, which provides the required read/write access to the Elasticsearch process.

Increased ulimits for nofile and nproc must be available for the Elasticsearch containers. Verify the init system for the Docker daemon sets them to acceptable values.

To check the Docker daemon defaults for ulimits, run:
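One way to do this is to print the limits from inside a throwaway container. The sketch below uses the centos:8 image mentioned earlier as the Elasticsearch base image; the helper name is hypothetical.

```shell
# Hypothetical helper: print the hard and soft limits for open files (-n)
# and processes (-u) as seen from inside a temporary container.
es_check_ulimits() {
  docker run --rm centos:8 /bin/bash -c \
    'ulimit -Hn && ulimit -Sn && ulimit -Hu && ulimit -Su'
}
```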

If needed, adjust them in the daemon or override them per container. For example, when using docker run, set:
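A per-container override might look like the sketch below. The helper name is hypothetical and the value 65535 is an illustrative soft:hard limit for open files.

```shell
# Hypothetical helper: raise the open-file limit for this container only.
# 65535:65535 is an illustrative soft:hard pair.
es_run_with_nofile() {
  docker run --ulimit nofile=65535:65535 \
    docker.elastic.co/elasticsearch/elasticsearch:7.12.0
}
```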

Swapping needs to be disabled for performance and node stability. For information about ways to do this, see Disable swapping.

If you opt for the bootstrap.memory_lock: true approach, you also need to define the memlock: true ulimit in the Docker daemon, or explicitly set it for the container as shown in the sample compose file. When using docker run, you can specify:
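A minimal sketch of those options on a full docker run command; the helper name is hypothetical.

```shell
# Hypothetical helper: enable memory locking and lift the memlock limit
# (-1:-1 means unlimited soft and hard limits) for this container.
es_run_memlock() {
  docker run -e "bootstrap.memory_lock=true" --ulimit memlock=-1:-1 \
    docker.elastic.co/elasticsearch/elasticsearch:7.12.0
}
```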

The image exposes TCP ports 9200 and 9300. For production clusters, randomizing the published ports with --publish-all is recommended, unless you are pinning one container per host.

By default, Elasticsearch automatically sizes JVM heap based on a node’s roles and the total memory available to the node’s container. We recommend this default sizing for most production environments. If needed, you can override default sizing by manually setting JVM heap size.

To manually set the heap size in production, bind mount a JVM options file under /usr/share/elasticsearch/config/jvm.options.d that includes your desired heap size settings.
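The sketch below writes such a file and bind-mounts it. The file name heap.options, the local jvm.options.d directory, the 2g heap value, and the helper name are all illustrative assumptions.

```shell
# Write a heap-size options file; the file name and the 2g value are illustrative.
mkdir -p jvm.options.d
cat > jvm.options.d/heap.options <<'EOF'
-Xms2g
-Xmx2g
EOF

# Hypothetical helper: mount the options file into the image's jvm.options.d directory.
es_run_with_heap_file() {
  docker run \
    -v "$PWD/jvm.options.d/heap.options:/usr/share/elasticsearch/config/jvm.options.d/heap.options" \
    docker.elastic.co/elasticsearch/elasticsearch:7.12.0
}
```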

For testing, you can also manually set the heap size using the ES_JAVA_OPTS environment variable. For example, to use 16GB, specify -e ES_JAVA_OPTS="-Xms16g -Xmx16g" with docker run. The ES_JAVA_OPTS variable overrides all other JVM options. We do not recommend using ES_JAVA_OPTS in production. The docker-compose.yml file above sets the heap size to 512MB.

Pin your deployments to a specific version of the Elasticsearch Docker image. For example, docker.elastic.co/elasticsearch/elasticsearch:7.12.0.

You should use a volume bound on /usr/share/elasticsearch/data for the following reasons:

- The data of your Elasticsearch node won’t be lost if the container is killed

- Elasticsearch is I/O sensitive and the Docker storage driver is not ideal for fast I/O

- It allows the use of advanced Docker volume plugins

If you are using the devicemapper storage driver, do not use the default loop-lvm mode. Configure docker-engine to use direct-lvm.

Consider centralizing your logs by using a different logging driver. Also note that the default json-file logging driver is not ideally suited for production use.

When you run in Docker, the Elasticsearch configuration files are loaded from /usr/share/elasticsearch/config/.

To use custom configuration files, you bind-mount the files over the configuration files in the image.

You can set individual Elasticsearch configuration parameters using Docker environment variables. The sample compose file and the single-node example use this method.

To use the contents of a file to set an environment variable, suffix the environment variable name with _FILE. This is useful for passing secrets such as passwords to Elasticsearch without specifying them directly.

For example, to set the Elasticsearch bootstrap password from a file, you can bind mount the file and set the ELASTIC_PASSWORD_FILE environment variable to the mount location. If you mount the password file to /run/secrets/bootstrapPassword.txt, specify:
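A sketch of the corresponding docker run options follows. The helper name and the host-side location of bootstrapPassword.txt (the current directory) are assumptions; the container path is the one named above.

```shell
# Hypothetical helper: mount a local password file and point
# ELASTIC_PASSWORD_FILE at its location inside the container.
es_run_password_file() {
  docker run \
    -v "$PWD/bootstrapPassword.txt:/run/secrets/bootstrapPassword.txt" \
    -e ELASTIC_PASSWORD_FILE=/run/secrets/bootstrapPassword.txt \
    docker.elastic.co/elasticsearch/elasticsearch:7.12.0
}
```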

You can also override the default command for the image to pass Elasticsearch configuration parameters as command line options. For example:
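In the sketch below, the setting is passed with the -E option after the image name. The helper name and the cluster name mynewclustername are placeholders.

```shell
# Hypothetical helper: replace the image's default command and pass a
# configuration parameter with -E. "mynewclustername" is a placeholder value.
es_run_cluster_name() {
  docker run docker.elastic.co/elasticsearch/elasticsearch:7.12.0 \
    bin/elasticsearch -Ecluster.name=mynewclustername
}
```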

While bind-mounting your configuration files is usually the preferred method in production, you can also create a custom Docker image that contains your configuration.

Create custom config files and bind-mount them over the corresponding files in the Docker image. For example, to bind-mount custom_elasticsearch.yml with docker run, specify:
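A minimal sketch, assuming custom_elasticsearch.yml sits in the current directory; the helper name is hypothetical.

```shell
# Hypothetical helper: mount a local config file over the image's elasticsearch.yml.
es_run_custom_config() {
  docker run \
    -v "$PWD/custom_elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml" \
    docker.elastic.co/elasticsearch/elasticsearch:7.12.0
}
```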

The container runs Elasticsearch as user elasticsearch using uid:gid 1000:0. Bind mounted host directories and files must be accessible by this user, and the data and log directories must be writable by this user.

By default, Elasticsearch will auto-generate a keystore file for secure settings. This file is obfuscated but not encrypted. If you want to encrypt your secure settings with a password, you must use the elasticsearch-keystore utility to create a password-protected keystore and bind-mount it to the container as /usr/share/elasticsearch/config/elasticsearch.keystore. In order to provide the Docker container with the password at startup, set the Docker environment value KEYSTORE_PASSWORD to the value of your password. For example, a docker run command might have the following options:
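The sketch below assumes the keystore file is in the current directory and that the password is already exported in the calling shell as KEYSTORE_PASSWORD. The helper name is hypothetical.

```shell
# Hypothetical helper: mount a password-protected keystore and pass its password.
# The function stops with an error if KEYSTORE_PASSWORD is not set in the caller.
es_run_keystore() {
  docker run \
    -v "$PWD/elasticsearch.keystore:/usr/share/elasticsearch/config/elasticsearch.keystore" \
    -e "KEYSTORE_PASSWORD=${KEYSTORE_PASSWORD:?set KEYSTORE_PASSWORD first}" \
    docker.elastic.co/elasticsearch/elasticsearch:7.12.0
}
```

Reading the password from the environment keeps it out of the helper itself, although it is still visible in the container's environment.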

In some environments, it might make more sense to prepare a custom image that contains your configuration. A Dockerfile to achieve this might be as simple as:
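As a sketch, the command below writes a two-line Dockerfile that copies a local elasticsearch.yml into the image's config directory. The local file name is an assumption, and running it overwrites any Dockerfile in the current directory.

```shell
# Write a minimal Dockerfile that bakes a local elasticsearch.yml into the image.
# --chown keeps the file owned by the elasticsearch user inside the image.
cat > Dockerfile <<'EOF'
FROM docker.elastic.co/elasticsearch/elasticsearch:7.12.0
COPY --chown=elasticsearch:elasticsearch elasticsearch.yml /usr/share/elasticsearch/config/
EOF
```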

You could then build and run the image with:
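A minimal sketch of the two commands follows. The image tag elasticsearch-custom and the helper names are placeholders.

```shell
# Hypothetical helper: build the custom image from the Dockerfile in this directory.
es_build_custom() {
  docker build --tag=elasticsearch-custom .
}

# Hypothetical helper: run the custom image interactively with an anonymous
# volume for the data directory.
es_run_custom() {
  docker run -ti -v /usr/share/elasticsearch/data elasticsearch-custom
}
```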

Some plugins require additional security permissions. You must explicitly accept them either by:

- Attaching a tty when you run the Docker image and allowing the permissions when prompted.

- Inspecting the security permissions and accepting them (if appropriate) by adding the --batch flag to the plugin install command.

See Plugin management for more information.

You now have a test Elasticsearch environment set up. Before you start serious development or go into production with Elasticsearch, you must do some additional setup:

- Learn how to configure Elasticsearch.

- Configure important Elasticsearch settings.

- Configure important system settings.
